Away Mission: Sem-Tech 07 Conference, May 2007, San Jose, CA

The outline of next-generation web technology is coming into sharper focus: Web 3.0 is taking shape... today.

This was the third Sem-Tech conference, and it was gratifying to see tools and projects emerging and even some early products hitting the new market for semantic technologies. The conference focused on both research and the commercialization of ontologies and reasoning tools. It had tutorials, keynotes, tech sessions and panels, and many developers who had previously discussed novel ideas were now demoing working betas.

This was something of a coming-out party for semantic startups and venture capitalists. Besides more business people on panels, poster sessions for academic and open source projects, and informal meetings in the corridors, it was clear that a lot of research was coming out of the lab and being productized or open-sourced. Even a giant like Yahoo proudly demoed beta web sites using its Webby version of semantic technology.

(Note: The second Sem-Tech conference was reviewed in LG issue #129, and that article contains definitions and discussions of the underlying technologies. Please refer to it for the terminology of semantic technology [OWL, RDF, etc].)

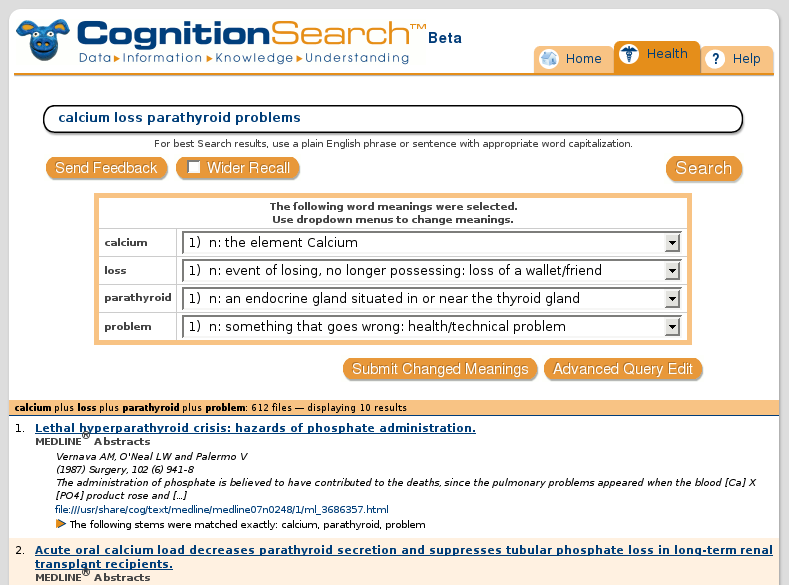

One example of products slipping into mainstream websites is CognitionSearch, which is currently used as the advanced search engine at the Lexis-Nexis Concordance service. Dr. Kathleen Dahlberg, a professor at UC Santa Monica and CTO and founder of Cognition, presented a session on the underlying technologies entitled "Improving Precision and Recall with Linguistic Semantics".

Cognition Technologies is a search-technology company that has created a meaning-based evolution in text searching. Its patented architecture, known as CognitionSearch, is able to deliver significantly more precise results, with far greater recall, than current technologies. In the Medline demo I saw, a plain-text question was parsed by the back end and the user was presented with a small pull-down menu to select the correct knowledge domain. The results were quite relevant and included matches on words that were not in the original question [!] - e.g., a question on Hodgkin's disease found articles on lymphoma ranked near the top. This is because the system uses taxonomies to process general as well as specific concepts, so a search for "vehicle accident" will find items on cars, buses, boats, etc.
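To make the "vehicle accident" example concrete, here is a minimal sketch of taxonomy-based query expansion in Python (one of the languages Cognition offers APIs for). It is not CognitionSearch's patented method: the tiny taxonomy, the sample documents, and the `expand` and `search` helpers are all hypothetical, and matching is plain word overlap rather than linguistic parsing.

```python
# Hypothetical toy taxonomy: each concept maps to its narrower concepts.
TAXONOMY = {
    "vehicle": ["car", "bus", "boat"],
    "car": ["sedan", "taxi"],
    "boat": ["ferry"],
}


def expand(term):
    """Return the term plus every concept below it in TAXONOMY."""
    found = {term}
    stack = [term]
    while stack:
        for child in TAXONOMY.get(stack.pop(), []):
            if child not in found:
                found.add(child)
                stack.append(child)
    return found


def search(query, documents):
    """Return ids of documents that mention any expanded query word."""
    terms = set()
    for word in query.lower().split():
        terms |= expand(word)
    hits = []
    for doc_id, text in documents.items():
        if set(text.lower().split()) & terms:
            hits.append(doc_id)
    return sorted(hits)


docs = {
    "d1": "ferry collision in the harbor",
    "d2": "taxi rear-ended at a light",
    "d3": "quarterly earnings report",
}
print(search("vehicle accident", docs))  # ['d1', 'd2']
```

Neither matching document contains the word "vehicle"; they are found only because the taxonomy links "vehicle" to "ferry" and "taxi". Expanding downward to narrower concepts is what raises recall here; a production system would also need ranking so that broad matches do not swamp precision.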

Here is a partial screen snapshot of what the Cognition interface provides:

To view a demonstration of Cognition's technology, please visit www.CognitionSearch.com. Cognition has C++, Java, Perl, Python and Ruby APIs.

"The answer to your question is..."

Now let's take a look at the semantic tech efforts of the federal government and the university research supported by the Disruptive Technology Office (formerly known as ARDA, the Advanced Research and Development Activity - and I am not kidding). Many of these projects still list themselves as ARDA-supported. Although some of the funding comes from those notorious three-letter agencies, most of this work was never classified and is accessible to the general public.

The revelation here is that ARDA/DTO projects have made significant headway and have solved, or are solving, many difficult problems in knowledge representation and machine reasoning. This was the point of a dense presentation by Dr. Lucian Russell titled "Advanced Intelligence Community R&D Meets the Semantic Web!"

"Look, the world's greatest minds tried to bring together language and logic for 50 years and failed. Now it is possible, so as this changes everything...." Russell went on to describe four developments that enable this paradigm shift. "It starts with English (i.e. WordNet) because that project (http://wordnet.princeton.edu/) defines the most common word meanings in the English language. Its database is the semantics of English." The key here is that, thanks to WordNet, machine-mediated semantic analysis is possible.

Besides the availability of a well-defined vocabulary database, ARDA has produced these technologies:

-- IKRIS, for the representation of domain-specific knowledge and inter-domain translation

-- AQUAINT, the system used for Advanced Question Answering for Intelligence

-- TimeML, a markup language for Temporal and Event expression [http://www.cs.brandeis.edu/~jamesp/arda/time/]

According to Russell, prior to the AQUAINT projects, software systems could not resolve issues involving persistence and temporality, and thus could not read English documents. This led to the development of TimeML, which allows time stamping, event ordering, and reasoning about processes occurring over time. That is an essential part of real-world knowledge representation, since it covers the actions described by verbs.
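The event-ordering reasoning that TimeML makes possible can be illustrated with a toy example: given "before" links between events, derive every implied ordering and recover a timeline. This is a simplified sketch, not TimeML markup; the event names and the use of a single BEFORE relation (TimeML defines a richer set of temporal link types) are assumptions made for illustration.

```python
from itertools import product

# Hypothetical events; each pair means "first event happened before second".
BEFORE = {
    ("purchase", "shipment"),
    ("shipment", "delivery"),
    ("delivery", "inspection"),
}


def closure(pairs):
    """Transitive closure: from a<b and b<c, derive a<c until nothing changes."""
    result = set(pairs)
    changed = True
    while changed:
        changed = False
        for (a, b), (c, d) in product(list(result), repeat=2):
            if b == c and (a, d) not in result:
                result.add((a, d))
                changed = True
    return result


def happened_before(a, b, pairs=BEFORE):
    """True if the links imply that event a precedes event b."""
    return (a, b) in closure(pairs)


def timeline(pairs=BEFORE):
    """Order events by how many events (directly or indirectly) precede them."""
    ordered = closure(pairs)
    events = {e for pair in pairs for e in pair}
    return sorted(events, key=lambda e: sum(1 for _, later in ordered if later == e))


print(happened_before("purchase", "inspection"))  # True
print(timeline())  # ['purchase', 'shipment', 'delivery', 'inspection']
```

No text states directly that the purchase came before the inspection; the system infers it. Sorting by predecessor count gives a valid order because, once the relation is transitively closed, an event always has strictly more predecessors than any event before it; events with no ordering between them may come out in either order.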

All of these efforts matured and came to fruition at the end of 2006, and they mark a significant advance in semantic interoperability. Together, they make it possible to describe scientific knowledge in language and with logic that is machine-readable.

AQUAINT allows a system to answer questions formulated in English based on the content in a repository. For this to occur, the system must parse and understand the question and its relationship to the repository and then find answers in that repository.

Javelin, a multilingual offshoot of AQUAINT being developed at CMU, is currently answering English questions from repositories in Chinese and Japanese. Recently, that research has broadened to questions that involve reasoning about relationships ("How did Egypt acquire SAMs?") and questions that are answered from multilingual sources.

IKRIS has the goal of providing for translation of Knowledge Representations (KRs) from different domains and different contractors. It does this by contextualizing these KRs and allowing translation of scenarios to support automated reasoning. More specifically, a major goal of IKRIS is to represent knowledge that is relevant to intelligence analysis tasks in a form that enhances automated support for analysts.

"IKRIS is a logic system that encompasses all of the work the W3C did in the OWL language, all of the ISO work on common logic (CL) [http://en.wikipedia.org/wiki/Common_Logic] and extends that to non-monotonic logic, the logic of scientific discovery," Russell said.

A fuller description of AQUAINT and scenario-based analysis is here: [http://languagecomputer.com/hltnaacl04qa/HLT-NAACL-QA-Wkshp-May-04-keynote.pdf]

In 2005 the federal government stated that semantic interoperability was a goal. This quote comes from Chapter 3 of the federal Data Reference Model (DRM) documentation: "Implementing information sharing infrastructures between discrete content owners (even with using service-oriented architectures or business process modeling approaches) still has to contend with problems with different contexts and their associated meanings. Semantic interoperability is a capability that enables enhanced automated discovery and usage of data due to the enhanced meaning (semantics) that are provided for data."

"The federal DRM stated that Data Description and Data Context files should be created to enable this sharing but did not say how this would happen," explained Russell. "That was because in 2005 there was no way to specify precisely what 'enhanced' meant. As of May 2006, due to the advances by AQUAINT, there now is. Most important are the results from IKRIS."

One of the benefits of these capabilities is a new relevance for data descriptions and DB documentation - a stepchild in the current IT world and thus a black hole in the IT budget. These are tedious tasks, often left undone or significantly out of date, and hardly anybody would invest time in reading these descriptions. But now semantic and linguistic technology can automate the conversion of DB documentation into knowledge bases that answer questions expressed in natural English - and these can be used to create SOAs and to audit IT policy. A Brave New World is coming...

To achieve this knowledge automation, Russell gave Linux Gazette the following prescription: "The way forward is simple: write precise descriptions of data collections using English. The semantics of each word should be chosen from the meanings of the word in WordNet, augmented as needed. For databases with schemas, the meanings of all data attributes should be described in terms of the processes generating the data values that are stored in the database. Then input the descriptions to a software tool that extracts logical relations from the text." Several such tools are arriving on the market and in open source software projects.
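Russell's prescription can be sketched as a structured data-dictionary entry that pairs an English description with the process generating the stored values and the chosen word meanings, then emits simple relations a reasoning tool could consume. Everything here is hypothetical: the `AttributeDescription` record, the relation names, and the gloss standing in for a WordNet sense are illustrative assumptions, and the "extraction" is a trivial field-to-triple mapping, not the natural-language relation extraction Russell describes.

```python
from dataclasses import dataclass, field


@dataclass
class AttributeDescription:
    """Hypothetical data-dictionary entry for one database attribute."""
    table: str
    attribute: str
    text: str                 # precise English description of the attribute
    generating_process: str   # process that produces the stored values
    senses: dict = field(default_factory=dict)  # word -> chosen meaning (gloss)


def to_relations(desc):
    """Map one description to (subject, relation, object) triples."""
    subject = f"{desc.table}.{desc.attribute}"
    triples = [
        (subject, "describedAs", desc.text),
        (subject, "generatedBy", desc.generating_process),
    ]
    # One triple per sense-tagged word, in a stable (sorted) order.
    for word, meaning in sorted(desc.senses.items()):
        triples.append((subject, "usesMeaning", f"{word}: {meaning}"))
    return triples


hire_date = AttributeDescription(
    table="employee",
    attribute="hire_date",
    text="The date on which the employee was hired.",
    generating_process="HR onboarding workflow",
    senses={"hire": "verb, engage someone for work"},
)

for triple in to_relations(hire_date):
    print(triple)
```

Recording the generating process alongside the text follows Russell's point that an attribute's meaning comes from how its values are produced; a real tool would derive these relations from the prose itself.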

Russell was a co-author of the Data Reference Model, the Federal standard for sharing data. He has been involved with a number of unclassified R&D efforts supporting the Intelligence Community, and helped organize the Semantic Interoperability Community of Practice (SICoP) Special Conferences earlier in 2007.

Here is an earlier version of Russell's presentation, as a PPT file from the April SICoP conference:

http://colab.cim3.net/file/work/SICoP/2007-04-25/LRussell04252007.ppt

Other Sem-Tech sessions

"I will not use the word semantics... if it's done right, no one will know it's there," offered Chuck Rehberg, chief scientist at Semantic Insights. He described their beta product that can read text bases, make inferences and create tailored reports for individuals. He produced reports on all of the presidential candidates based on RSS feeds, showing how semantic matching could generate a selective and relevant report.

Radar Networks, a startup currently in stealth mode, is building a cool next-generation semantic application (the only thing we know with any certainty is that it's not a search engine). Nova Spivack, its founder, spoke of building end-user applications to help groups manage knowledge on the web as a repository. This application is slated to go into invite-only beta in Fall 2007.

Nova offered this insight: "With group information, the effort increases while the value of the information decreases... it's inversely proportional to the number of users." The remedy, he suggested, is a layer of semantic tech to help groups manage their knowledge. Said Nova suggestively, "We all have different file systems, email systems, informal knowledge bases... We need to automate that and make sense of all the information."

Another insight: Radar, Vulcan, and some other semantic startups are receiving VC funding from Paul Allen's group. Allen, a co-founder of Microsoft turned technology incubator, is very interested in the potential of semantic tech to intelligently link information on the web.

One notion from a tech session on "Semantic User Experiences" by Ross Centers suggested a way to bridge the developing Web 2.0 universe with semantic technology, often called the future Web 3.0. Drawing a distinction between these approaches, we have a choice of 'tags' vs. formal ontologies (or, as an analogy, something built by web users vs. built by "teams of dwarves locked in mines"). Could the Wiki metaphor bridge the gap? Semantic wikis could act as a way for "crowds" of users to refine a shared ontology, suggested Centers.

Although there were optional tours of Adobe and Oracle to view their semantic products and meet their researchers, most attendees chose a full afternoon product seminar by TopQuadrant to conclude the conference. "Semantic Web Modeling and Application Development using TopBraid" was presented by Dean Allemang and Holger Knublauch of TopQuadrant. This mini-tutorial showed basic modeling in RDF-S and OWL and deployment of a semantic application using TopBraid Live.

Attendees built a simple mashup without having to use the underlying APIs of Google Maps and other sites. Instead, a pre-built ontology was linked to simple data files and back-end processes to graphically build the mashup. In other words, the user drove a knowledge-based tool to create a dynamic, AJAX-based application without programming. This is more than just another level of indirection, and it worked fairly well.
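The RDF-S modeling covered in the tutorial pays off through inference. Below is a toy sketch of two standard RDFS entailment rules (subclass transitivity and type inheritance) applied to a handful of triples. It uses plain Python rather than TopBraid or any RDF library, and the `ex:` class and instance names are hypothetical.

```python
# Toy RDF graph as (subject, predicate, object) tuples; all ex: names are
# hypothetical, and plain Python stands in for a real RDF store or reasoner.
TRIPLES = {
    ("ex:Restaurant", "rdfs:subClassOf", "ex:Business"),
    ("ex:Business", "rdfs:subClassOf", "ex:Place"),
    ("ex:JoesDiner", "rdf:type", "ex:Restaurant"),
    ("ex:JoesDiner", "ex:address", "123 Main St, San Jose"),
}


def infer(triples):
    """Apply two RDFS entailment rules until no new triples appear:
    rdfs11 (subClassOf is transitive) and rdfs9 (an instance of a class
    is also an instance of each of its superclasses)."""
    graph = set(triples)
    while True:
        sub = [(s, o) for s, p, o in graph if p == "rdfs:subClassOf"]
        new = set()
        for a, b in sub:
            for c, d in sub:
                if b == c:
                    new.add((a, "rdfs:subClassOf", d))  # rdfs11
        for s, p, o in graph:
            if p == "rdf:type":
                for a, b in sub:
                    if o == a:
                        new.add((s, "rdf:type", b))  # rdfs9
        if new <= graph:
            return graph
        graph |= new


def instances_of(cls, graph):
    """List subjects typed (directly or by inference) as cls."""
    return sorted(s for s, p, o in graph if p == "rdf:type" and o == cls)


g = infer(TRIPLES)
print(instances_of("ex:Place", g))  # ['ex:JoesDiner']
```

A map-building step that asks for every `ex:Place` picks up the diner even though the data only declares it a Restaurant; that is the kind of indirection an ontology can add to a mashup.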

Here's a link to TopQuadrant: http://topquadrant.com/

Conclusions

The Semantic Technology conference scores well in having most presentations on the conference CD (including tutorials), daily updates of missing presentations, and printed tutorial handouts for almost all sessions.

It also scores high on the well-organized meals and snacks, although it could be a little more veggie-friendly. Some rooms for sessions had power cords for attendees, but not the main hall, leading to fierce competition for the few wall outlets.

It looks like the conference proceedings may not be available to the public, since last year's CD is still a commercially-available product. Officially, they will be posting portions of the 2006 and 2007 Semantic Technology Conferences in July. These files will be found on www.aliceinmetaland.com and www.semanticreport.com.

However, at publication time, this link was still open and did not ask for an ID and password: http://www.semantic-conference.com/2007/handouts. Links to the conference tutorials are also available there, and this 188-slide tutorial introducing Semantics and Ontologies from an IT perspective is a good place to start: http://www.semantic-conference.com/2007/handouts/2-UpBW/T1_Uschold_Michael_2UpBW.pdf

Also, here is a link for printable versions of the 2006 presentations:

http://www.semantic-conference.com/Presentations.html

Here are the aggregate ratings [0-10]:

Venue: 6 -- Parking in downtown San Jose is cheaper than SF, but you have to find it or use Light Rail.

Keynotes: 6 -- One was actually a panel

Session Quality: 7 -- As usual, you have to search for the gems, but a near-complete conference CD helped

Food: 7 -- sit-down meals with dessert

Conference Bag: 8 -- a real, multicompartment shoulder bag

Overall rating: 7

The next Semantic Technology Conference will be held May 18-22, 2008 in San Jose, CA.


Howard Dyckoff is a long term IT professional with primary experience at Fortune 100 and 200 firms. Before his IT career, he worked for Aviation Week and Space Technology magazine and before that used to edit SkyCom, a newsletter for astronomers and rocketeers. He hails from the Republic of Brooklyn [and Polytechnic Institute] and now, after several trips to Himalayan mountain tops, resides in the SF Bay Area with a large book collection and several pet rocks.
